I could go to any number of Danish or American articles for this, but let’s take one NPR article as our crash test dummy because it’s the one that caught my eye:

Stanford Apologizes After Vaccine Allocation Leaves Out Nearly All Medical Residents, Laurel Wamsley, NPR.org, 18 December

It’s a simple story: the leadership of Stanford Health Care and Stanford School of Medicine had to prioritize distribution of the first covid vaccinations among its various populations. They developed an algorithm to perform that task. As a result, their front-line medical residents ended up being prioritized at a level the residents considered inappropriate.

(We’ll set the delicacy of feeling to be found among Stanford’s medical residents aside as a topic for another day—and probably another writer.)

The article quotes from a letter signed by “a council composed of the chief residents” of Stanford Health Care and Stanford School of Medicine:

Many of us know senior faculty who have worked from home since the pandemic began in March 2020, with no in-person patient responsibilities, who were selected for vaccination. In the meantime, we residents and fellows strap on N95 masks for the tenth month of this pandemic without a transparent and clear plan for our protection in place. While leadership is pointing to an error in an algorithm meant to ensure equity and justice, our understanding is this error was identified on Tuesday and a decision was made not to revise the vaccine allocation scheme before its release today.

(As an aside, by the way, note the use of Kamala Harris’s beloved term “equity.” It really is showing up more and more, just as I foretold.)

So apparently leadership’s defense was that they hadn’t done anything wrong: it had been an error in an algorithm that was “meant to ensure equity and justice.” And when the error was pointed out to them, they didn’t feel a need to do anything about it because apparently equity and justice were being served just fine.

According to an email sent by a chief resident to other residents, Stanford’s leaders explained that an algorithm was used to assign its first allotment of the vaccine. The algorithm was said to have prioritized those health care workers at highest risk for COVID infections, along with factors like age and the location or unit where they work in the hospital. Residents apparently did not have an assigned location, and along with their typically young age, they were dropped low on the priority list.

Stanford has not provided an answer to NPR’s request for an explanation of its process or its algorithm.
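Since Stanford hasn’t published its algorithm, the following is a purely hypothetical Python sketch, not a reconstruction. It scores staff using only the kinds of factors the chief resident’s email mentions (exposure risk by unit, and age), with weights, unit names, and the `Worker`/`priority_score` names all made up for illustration. The one design decision worth staring at is the default: a worker with no assigned unit gets an exposure score of zero.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical exposure risk per hospital unit (0 = none, 1 = highest).
# Stanford's actual factors and weights are not public.
UNIT_RISK = {
    "emergency": 1.0,
    "icu": 1.0,
    "covid_ward": 1.0,
    "outpatient": 0.3,
    "remote": 0.0,
}


@dataclass
class Worker:
    name: str
    age: int
    unit: Optional[str]  # None means "no assigned location"


def priority_score(w: Worker) -> float:
    # A missing (None) or unrecognized unit silently falls back to 0.0.
    # That default is a choice a person made, not something the code "decided".
    exposure = UNIT_RISK.get(w.unit, 0.0)
    # Age scaled so that 65 and older counts as maximal age risk.
    age_factor = min(w.age, 65) / 65
    # Hypothetical equal weighting of the two factors.
    return 0.5 * exposure + 0.5 * age_factor


staff = [
    Worker("senior faculty, working from home", 64, "remote"),
    Worker("ICU nurse", 45, "icu"),
    Worker("resident, rotates through the ED", 29, None),
]

for w in sorted(staff, key=priority_score, reverse=True):
    print(f"{priority_score(w):.2f}  {w.name}")
# Prints, highest priority first:
# 0.85  ICU nurse
# 0.49  senior faculty, working from home
# 0.22  resident, rotates through the ED
```

Nobody in this sketch typed “put residents last.” Someone chose a default for a blank field, and that choice did the rest. Give the hypothetical resident the unit they actually work in (say, `"emergency"`) and their score rises to about 0.72, ahead of the faculty member working from home.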

Algorithms get a lot of blame for a lot of stupidity these days, but blaming algorithms for bad outcomes is like blaming your alarm clock for going off at the wrong time.

An algorithm is just an ordered set of instructions, and those instructions don’t write themselves.

Let’s simplify things a little with a recipe for buttered toast:

1. Put two slices of bread in a toaster.

2. Turn the toaster on.

3. When the bread is toasted, remove it from the toaster.

4. Butter the toast.

5. Enjoy your delicious buttered toast.

That’s the “buttered toast algorithm.” Simple, right?

But just think how many things could go wrong with that algorithm: for example, no limit is placed on the size or type of bread to be placed in the toaster. No definition of “toasted” is provided. No specifics are provided with respect to the butter: both the quantity and the method of application are left wide open.

Hypothetically, someone following the recipe slavishly could slice a two-kilo loaf of Danish rye down the middle, cram both halves into a toaster, leave them in until they’d been reduced to carbon logs, then split each log down the middle and shove in a full stick of butter. They’d still be operating within the parameters of the recipe, but they’d have utterly failed at the task of producing what most of us call “buttered toast.”

That’s not their fault, or the algorithm’s. It’s exclusively the fault of the cook who wrote the recipe. (Sorry: algorithm.) It assumes too much and specifies too little.
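To make that concrete, here is the toast recipe transcribed as a minimal Python sketch, next to a stricter version that spells out what the original leaves open. Everything in it is hypothetical: the `Bread` type, both function names, and the numeric limits (slice size, toasting time, grams of butter) are illustrative values, not any standard.

```python
from dataclasses import dataclass


@dataclass
class Bread:
    kind: str
    mass_grams: float
    thickness_mm: float


def buttered_toast(slices: list[Bread], toast_seconds: int, butter_grams: float) -> str:
    # Steps 1-5 transcribed literally: nothing checks the bread,
    # the meaning of "toasted", or the amount of butter.
    return (
        f"{len(slices)} piece(s) of {slices[0].kind}, toasted {toast_seconds}s, "
        f"with {butter_grams}g butter"
    )


def buttered_toast_strict(slices: list[Bread], toast_seconds: int, butter_grams: float) -> str:
    # The same steps plus the constraints the recipe left unstated.
    # These limits are made-up illustrative values.
    if len(slices) != 2:
        raise ValueError("the recipe calls for exactly two slices")
    for s in slices:
        if s.thickness_mm > 25 or s.mass_grams > 60:
            raise ValueError(f"{s.kind} slice is too big for a toaster")
    if not 60 <= toast_seconds <= 240:
        raise ValueError("'toasted' means 1 to 4 minutes here")
    if butter_grams > 20:
        raise ValueError("that is not a reasonable amount of butter")
    return buttered_toast(slices, toast_seconds, butter_grams)


# Half of a two-kilo loaf of Danish rye, twice, plus a full stick of butter (about 113 g).
half_loaf = Bread("Danish rye", mass_grams=1000, thickness_mm=120)

print(buttered_toast([half_loaf, half_loaf], toast_seconds=1800, butter_grams=113))
# → 2 piece(s) of Danish rye, toasted 1800s, with 113g butter

try:
    buttered_toast_strict([half_loaf, half_loaf], toast_seconds=1800, butter_grams=113)
except ValueError as err:
    print("rejected:", err)
# → rejected: Danish rye slice is too big for a toaster
```

The literal version happily “succeeds” at producing carbon logs; only the version whose author bothered to write down the assumptions can refuse.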

The leadership at Stanford wasn’t going for anything as simple as buttered toast, however: they wanted a recipe for “equity and justice” in the distribution of a vaccine.

The results were considered inequitable and unjust by the poor souls who’d been assigned to the back of the proverbial bus, whose definitions of equity and justice probably meant “us first.”

It sounds as though the recipe hadn’t been very well thought out.

It’s as if someone had written a recipe for buttered toast that began “Put two cats in the blender,” then expressed surprise that the recipe didn’t produce two slices of buttered, toasted bread.

Either that or the recipe had been very well thought out, but leadership hadn’t anticipated any significant backlash from the residents.

In either case it was leadership, not the algorithm, that screwed things up for the residents.

“We tried to get the priorities right,” they could have said, “but we really messed up. We’re looking them over to see if we can’t do a little better.”

Who would have begrudged them that?

But they decided to blame the algorithm.

Stanford’s leadership here is no different from anyone else using an algorithm as an excuse, or a cudgel, or anything but what it actually is: an explicit set of instructions to complete a task.

The concept of getting bad outcomes from bad inputs or a bad process is succinctly expressed in the formulation GIGO: Garbage In, Garbage Out. Lousy recipes make lousy dishes, and even excellent recipes made by inattentive cooks, or with the wrong ingredients, can produce pretty lousy food.

Keep that in mind when you see a headline like this:

Google blames algorithm for adding porn titles to train station search results (July 2020)

I doubt (but cannot rule out) that anyone wrote an algorithm saying, “When returning search results for queries about Metro-North train stations along the Hudson Line, append pornographic statements to all station names.”

I won’t cite the specific pornographic statements here, because this is still just a wee little blog fighting for inclusion by the search engines, but if you follow the link you can see just how wildly pornographic the texts were. My point is that, regardless of their intentions, someone did write an algorithm that produced that result, because that’s the result that happened. The algorithm didn’t fail: its author did. And their failure was, as so many programming failures are, primarily a failure of imagination.

# # #

Several Halloweens ago I wanted to stream scary Halloween music into our carport for the benefit of our Trick-or-Treaters (technically they were Slik-eller-Ballade’ere, but never mind that). I’d been getting spammed by Spotify forever, so I decided I’d finally take advantage of their exciting one-month free trial to get access to what I was sure would be a huge library of scary Halloween tracks.

I was not mistaken. Within moments of signing up for my free trial, I was rocking the neighborhood with hair-raising music and sound-effects.

At the end of the night I closed the Spotify app, and a month later my free trial expired without my ever having opened the app a second time.

Then the emails began: Spotify began trying to woo me back with album, artist, and playlist suggestions just for me!

It’s a good example of a bad algorithm.

The idea behind it is clear enough:

MARKETING DIRECTOR: “Let’s try to lure expired members back by getting them excited about all the music we’ve got that they’d surely love to have available for listening on demand!”

MARKETEER: “How do we know what they’d love?”

MARKETING DIRECTOR: “Just look at what they’ve listened to and then list things that are similar, or list stuff that other people who listened to the same stuff as them also liked.”

MARKETEER: “What if someone becomes a trial member on Halloween, streams a bunch of Halloween stuff, then never logs in again?”

MARKETING DIRECTOR: “Don’t be ridiculous.”
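The marketing director’s idea is a real technique. Here is a minimal Python sketch of one simple version of it, item co-occurrence counting (“people who listened to what you listened to also liked…”). I have no idea what Spotify actually runs; the listening histories, the user names, and the `recommend` function are invented for illustration.

```python
from collections import Counter

# Made-up listening histories: user -> set of tracks played.
HISTORIES = {
    "ann": {"Thriller", "Monster Mash", "Spooky Scary Skeletons", "Ghostbusters"},
    "bob": {"Monster Mash", "Ghostbusters", "This Is Halloween"},
    "cat": {"Spooky Scary Skeletons", "This Is Halloween", "Toccata and Fugue in D minor"},
    "dan": {"Kind of Blue", "A Love Supreme", "Giant Steps"},
}


def recommend(user_tracks: set[str], histories: dict[str, set[str]], k: int = 3) -> list[str]:
    """Score each track the user hasn't heard by how much the listeners
    who played it overlap with the user's own history."""
    scores = Counter()
    for other_tracks in histories.values():
        overlap = len(user_tracks & other_tracks)
        if overlap == 0:
            continue  # this listener has nothing in common with the user
        for track in other_tracks - user_tracks:
            scores[track] += overlap
    return [track for track, _ in scores.most_common(k)]


# One night of Halloween streaming is the *entire* history the system has for me.
me = {"Monster Mash", "Spooky Scary Skeletons"}
print(recommend(me, HISTORIES))
```

Every suggestion comes back as more Halloween music, and nothing from the jazz listener’s shelf can ever appear, because one night of spooky tracks is the only evidence the sketch has about me. The counting does exactly what it was told; the assumption that a trial member’s history represents their taste is what fails.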

The problem is that, taken one by one, people are “ridiculous.” (Present company included.) Look at the people you grew up with: think of friends who went to the same school as you, sat in the same classes as you, lived in households similar to your own, and had interests similar to yours. You obviously had a great deal in common, which is probably how you became such close friends, but do you remember how passionately you disagreed about music, books, movies, and who you wanted to sleep with?

No amount of smoothing with aggregates will ever compensate for the wild disparities between us. You can target the middle of the bell curve all you want, and you can achieve considerable business success in doing so (he said with an air of experience), but you’ll also have to write off the trailing slopes on both sides of that curve.

A 2016 article in the Danish newspaper Information went into some depth on the subject of what algorithms could and could not do. Because it was written by a data specialist, I believe the article overestimated the actual value of algorithms (and was excessively optimistic about AI), but I think it hit some of the problems of our increasing reliance on algorithms spot on (my translation):

Algorithms create personally targeted content online every day with intelligent classifications and informational associations.

That’s essential for a positive user experience because the information flow online is massive. Algorithms curate the content so that we’re not burdened with having to prioritize and filter large amounts of information.

In 2015, a U.S. research team studied the extent to which Facebook users were aware of the impact of algorithms on content.

It turned out that over half (62.5 percent) of the respondents were not at all aware of the algorithms’ curation of the content on social media.

Facebook’s algorithms have the power to shape users’ experience and perception of the world, yet the majority of users are unaware of their existence.

The article is from 2016, remember, but even as recently as 2019 Pew Research found that 74% of American Facebook users didn’t even know the platform maintained lists of their interests and preferences, which suggests a level of technological naivete that’s troublesome at best, terrifying at worst.

Bear in mind that YouTube has announced that their own “algorithms” would remove users who posted content suggesting that the 2020 presidential election was rigged and that Donald Trump lost the election on account of voter fraud. Without getting into the question of whether or not there was any fraud at all, or whom it benefited, take these morsels together:

- Social media determine what their users do and don’t see in their feeds

- Social media companies have political agendas

- Most Americans don’t even realize their social media feeds are curated

In other words, without their knowing it, a lot of people’s perceptions are being at least partly shaped by technology companies that are deliberately manipulating them.

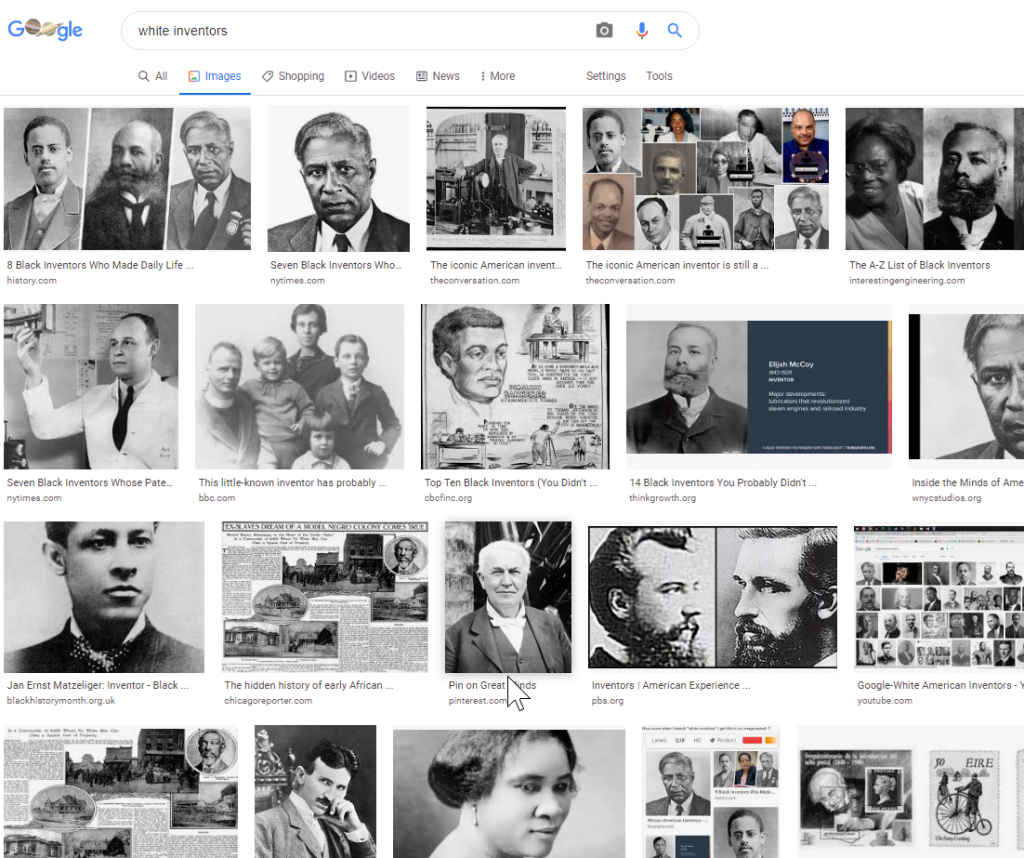

Which explains why the image search I just this moment conducted on Google for “white inventors” produced this result:

(Yes, I know I’ve used this example in the past, but it’s so extraordinarily weird that it’s become my go-to example.)

You may believe there’s some kind of social justice in presenting people looking for white inventors with pictures of mostly black inventors. Maybe there is. Maybe Google is providing a magnificent public service. But keep in mind that Google is doing it actively and consciously, and that you may not always agree with their particular social justice priorities.

That may not seem important on a stupid image search, but Stanford’s medical residents would, I’m sure, be happy to give you a quick and informative explanation of how “algorithms” can have serious real-life consequences.

In fact, one neurology resident does just that at the end of the NPR article:

“Algorithms are made by people and the results. . . were reviewed multiple times by people,” she wrote in an email to NPR. “The ones who ultimately approved the decisions are responsible. If this is an oversight, even if unintentional, it speaks volumes about how the front line staff and residents are perceived: an afterthought, only after we’ve protested. There’s an utter disconnect between the administrators and the front line workers. This is also reflective that no departmental chair or chief resident was involved in the decision making process.”

Just a shot in the dark here, but I’m guessing there are at least 74 million American voters who feel that there’s “an utter disconnect between the administrators” of social media and the conservative American public.

It’s a point Aske Mottelson made in his 2016 Information article (again, my translation):

But computer technology today is as much, yes, technology as it is a political and thus a democratic issue.

The social media and platforms of the internet are often hailed as an open public sphere for unrestrained democratic participation, but we also need to view technology as a threat to our personal freedom.

Technology is as much a tool for democratic participation as an instrument for limiting and manipulating the same.

Today, the algorithm is such a pervasive component in the structuring of our everyday lives and the way reality is presented to us that it calls for a personal, democratic, and political stance.

I rarely find myself in agreement with anything published in Information, but Mottelson is entirely right here.

Google and Facebook are cooperating with the governments of countries like China and Vietnam not to broaden democratic debate, but to stifle and control it. Google and Facebook are therefore literally the enemies of freedom.

And we’re supposed to accept them here in the west as neutral arbiters of what may and may not be considered civil discourse?

In Basic Economics, Thomas Sowell defines economics as “the study of the use of scarce resources which have alternative uses.” He gives that definition up front, then throughout the rest of his book (which should be required reading for everyone) he uses that full definition everywhere one might otherwise expect to find the word “economics.” It’s a very clever device. It forces one actually to think about the use of scarce resources which have alternative uses in those particular contexts instead of simply letting one’s eyes pass lazily over the ambiguous word “economics.” It keeps one’s eye on the ball.

We ought to start doing that with the word “algorithm.” Every time you see it, try replacing it with “a set of ordered instructions someone set up to accomplish a particular task.”

Because “leadership is pointing to an error in the set of ordered instructions they set up to achieve equity and justice in their vaccination priorities” gives a very clear picture of things.

“There was an error in the equity and justice algorithm” does not.